-

The contrast between Welsh and English

streamable.com Watch "https://www.tiktok.com/@oliversoxford/video/7344427037815344417" on Streamable.

-

Mixtral 8x7B can process a 32K token context window and works in French, German, Spanish, Italian, and English.

arstechnica.com Everybody’s talking about Mistral, an upstart French challenger to OpenAI. "Mixture of experts" Mixtral 8x7B helps open-weights AI punch above its weight class.

On Monday, Mistral AI announced a new AI language model called Mixtral 8x7B, a "mixture of experts" (MoE) model with open weights that reportedly matches OpenAI's GPT-3.5 in performance. That achievement has been claimed by others in the past, but this time it is being taken seriously by AI heavyweights such as OpenAI's Andrej Karpathy and Nvidia's Jim Fan. It means we're closer to having a ChatGPT-3.5-level AI assistant that can run freely and locally on our devices, given the right implementation.

Mistral, based in Paris and founded by Arthur Mensch, Guillaume Lample, and Timothée Lacroix, has seen a rapid rise in the AI space recently. It has been quickly raising venture capital to become a sort of French anti-OpenAI, championing smaller models with eye-catching performance. Most notably, Mistral's models run locally with open weights that can be downloaded and used with fewer restrictions than closed AI models from OpenAI, Anthropic, or Google. (In this context "weights" are the computer files that represent a trained neural network.)

Mixtral 8x7B can process a 32K token context window and works in French, German, Spanish, Italian, and English. It works much like ChatGPT in that it can assist with compositional tasks, analyze data, troubleshoot software, and write programs. Mistral claims that it outperforms Meta's much larger LLaMA 2 70B (70 billion parameter) large language model and that it matches or exceeds OpenAI's GPT-3.5 on certain benchmarks, as seen in the chart below.

The speed at which open-weights AI models have caught up with what was OpenAI's top offering only a year ago has taken many by surprise. Pietro Schirano, the founder of EverArt, wrote on X, "Just incredible. I am running Mistral 8x7B instruct at 27 tokens per second, completely locally thanks to @LMStudioAI. A model that scores better than GPT-3.5, locally. Imagine where we will be 1 year from now."

LexicaArt founder Sharif Shameem tweeted, "The Mixtral MoE model genuinely feels like an inflection point — a true GPT-3.5 level model that can run at 30 tokens/sec on an M1. Imagine all the products now possible when inference is 100% free and your data stays on your device." To which Andrej Karpathy replied, "Agree. It feels like the capability / reasoning power has made major strides, lagging behind is more the UI/UX of the whole thing, maybe some tool use finetuning, maybe some RAG databases, etc."

Mixture of experts

So what does mixture of experts mean? As this excellent Hugging Face guide explains, it refers to a machine-learning model architecture where a gate network routes input data to different specialized neural network components, known as "experts," for processing. The advantage of this is that it enables more efficient and scalable model training and inference, as only a subset of experts are activated for each input, reducing the computational load compared to monolithic models with equivalent parameter counts.

In layperson's terms, a MoE is like having a team of specialized workers (the "experts") in a factory, where a smart system (the "gate network") decides which worker is best suited to handle each specific task. This setup makes the whole process more efficient and faster, as each task is done by an expert in that area, and not every worker needs to be involved in every task, unlike in a traditional factory where every worker might have to do a bit of everything.
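The gate-and-experts routing described above can be sketched in a few lines of plain Python. This is a toy illustration, not Mixtral's code: the gate is a single linear layer, the "experts" are hypothetical stand-in functions, and both picking the top two experts and renormalizing the softmax over only those two are assumptions (that is how Mixtral is commonly described, but the article does not say so).

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def matvec(matrix: Sequence[Sequence[float]], x: Sequence[float]) -> Vector:
    """Multiply a matrix (one row per output) by the vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]


def softmax(logits: Sequence[float]) -> Vector:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Sequence[float],
    gate_weights: Sequence[Sequence[float]],
    experts: Sequence[Callable[[Sequence[float]], Vector]],
    top_k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route x to the top_k experts picked by the gate and mix their outputs.

    Returns the combined output and the indices of the experts that ran.
    """
    logits = matvec(gate_weights, x)  # the gate scores every expert
    ranked = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)
    chosen = ranked[:top_k]
    weights = softmax([logits[i] for i in chosen])  # renormalize over chosen experts only
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


# Hypothetical toy setup: 4 experts that just scale their input by 1, 2, 3 and 4.
toy_experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
toy_gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]

out, chosen = moe_forward([2.0, 1.0], toy_gate, toy_experts, top_k=2)
print(chosen)  # [0, 3]
print(out)
```

Only the experts listed in `chosen` are ever called, which is where the compute savings come from: with 8 experts and `top_k=2`, each input pays for two expert evaluations rather than eight.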

OpenAI has been rumored to use a MoE system with GPT-4, accounting for some of its performance. In the case of Mixtral 8x7B, the name implies that the model is a mixture of eight 7-billion-parameter neural networks, but as Karpathy pointed out in a tweet, the name is slightly misleading because, "it is not all 7B params that are being 8x'd, only the FeedForward blocks in the Transformer are 8x'd, everything else stays the same. Hence also why total number of params is not 56B but only 46.7B."
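Karpathy's point can be checked with a back-of-the-envelope parameter count. The hyperparameters below (model width 4096, feed-forward width 14336, 32 layers, grouped-query attention with 32 query heads and 8 key/value heads, a 32K vocabulary with an untied output head, 8 experts with 2 active per token) are assumptions taken from Mixtral's publicly released configuration, not figures from the article. Only the feed-forward (expert) term is multiplied by the number of experts; attention, embeddings and norms are counted once.

```python
# Assumed Mixtral 8x7B hyperparameters (not stated in the article).
HIDDEN = 4096        # model width
FFN_HIDDEN = 14336   # inner width of each feed-forward expert
LAYERS = 32
N_HEADS = 32
N_KV_HEADS = 8       # grouped-query attention: fewer key/value heads
VOCAB = 32000
N_EXPERTS = 8
TOP_K = 2            # experts used per token (assumption)

HEAD_DIM = HIDDEN // N_HEADS  # 128


def expert_params() -> int:
    # SwiGLU feed-forward block: gate, up and down projections, no biases
    return 3 * HIDDEN * FFN_HIDDEN


def attention_params() -> int:
    q = HIDDEN * N_HEADS * HEAD_DIM
    kv = 2 * HIDDEN * N_KV_HEADS * HEAD_DIM
    o = N_HEADS * HEAD_DIM * HIDDEN
    return q + kv + o


def layer_params(experts_counted: int) -> int:
    router = HIDDEN * N_EXPERTS  # gate network: one logit per expert
    norms = 2 * HIDDEN           # two RMSNorm weight vectors per layer
    # Only the feed-forward part is replicated per expert.
    return experts_counted * expert_params() + attention_params() + router + norms


def total_params(experts_counted: int) -> int:
    embeddings = 2 * VOCAB * HIDDEN  # input embedding + untied output head
    final_norm = HIDDEN
    return LAYERS * layer_params(experts_counted) + embeddings + final_norm


total = total_params(N_EXPERTS)  # every expert stored
active = total_params(TOP_K)     # experts actually run for one token
print(f"total params:     {total / 1e9:.1f}B")   # 46.7B
print(f"active per token: {active / 1e9:.1f}B")  # 12.9B
```

Under these assumptions the stored total lands on the 46.7B figure Karpathy quotes rather than 8 × 7B = 56B, and with two experts per token only about 12.9B parameters take part in each forward pass.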

Mixtral is not the first "open" mixture of experts model, but it is notable for how well it performs relative to its parameter count. It's out now, available on Hugging Face and BitTorrent under the Apache 2.0 license. People have been running it locally using an app called LM Studio. Also, on Monday Mistral began offering beta access to an API for three levels of Mistral models.

- markets.businessinsider.com ThinkCERCA Unveils New Science of Reading Aligned, Foundational Reading & Linguistics Course for Grades 6-12

CHICAGO, Nov. 17, 2023 /PRNewswire/ -- ThinkCERCA, a recognized industry leader in student reading and writing growth, recently launched their new...

The key to ThinkCERCA's approach is the incorporation of linguistic principles.

Designed to provide adolescent students with instruction related to phonetic concepts and decoding, the program follows a science of reading approach in providing systematic and explicit instruction to fill in foundational gaps that secondary struggling readers will not close on their own or in a Language Arts classroom.

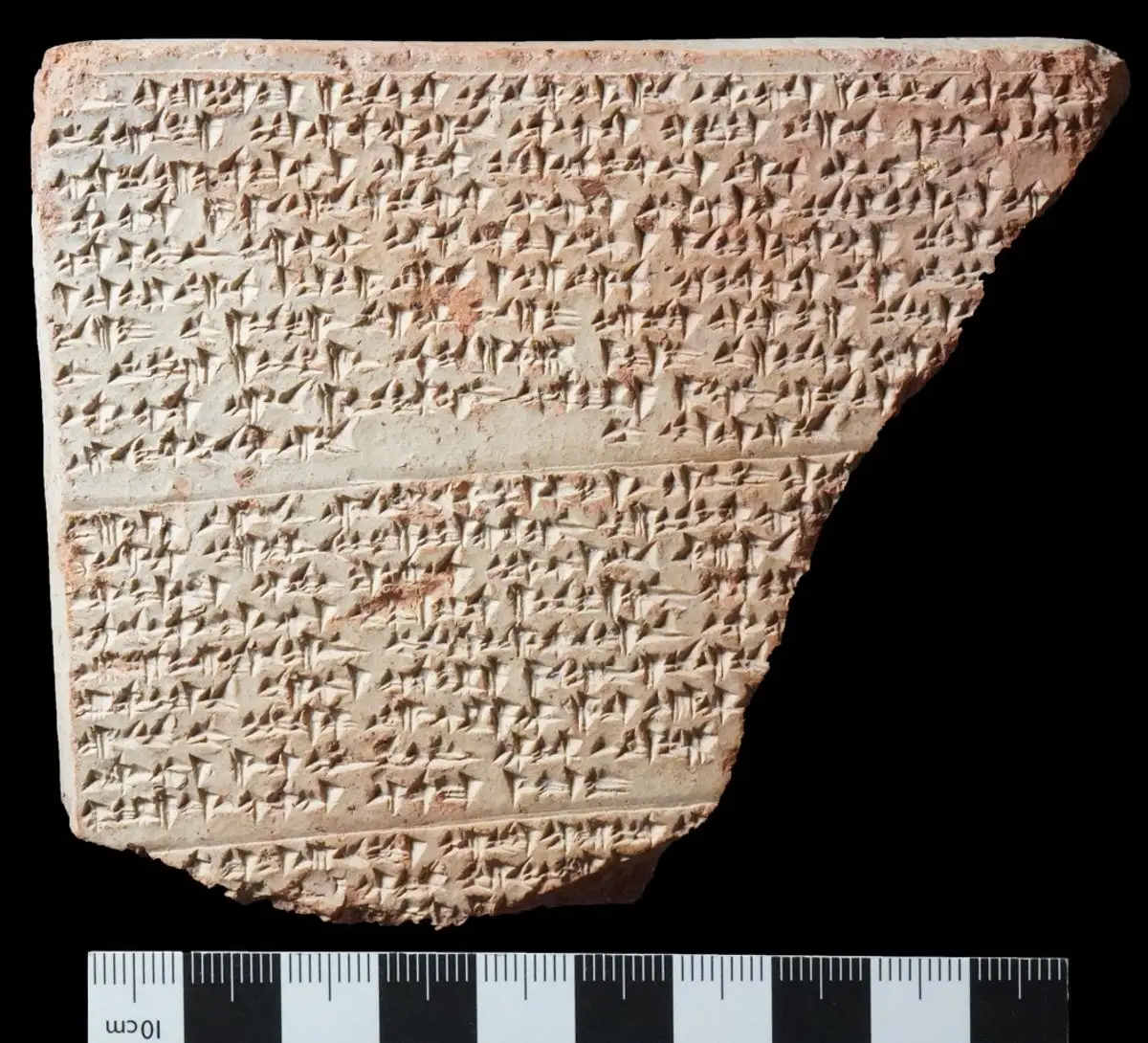

- news.yahoo.com Archaeologists discover previously unknown ancient language

Discovery helps reveal how a long-lost empire used multiculturalism to achieve political stability

- yaledailynews.com American Sign Language now Yale’s third most popular language - Yale Daily News

Since it was first offered in spring 2018, American Sign Language at Yale has grown to be the third largest language option, with over 200 students enrolled in ASL classes this semester.

-

I made an IPA keyboard for fcitx on Linux!


OP: @yukijoou:

> For those who may not know, the IPA (International Phonetic Alphabet) is widely used for writing out how words are pronounced. It’s very useful for linguists writing research papers, and for people looking to learn new languages! As I wasn’t satisfied with most IPA keyboards available, and wanted something that integrated well with fcitx, which I already have to use for Japanese input, I re-implemented parts of the SIL IPA keyboard. It’s not a one-to-one recreation (yet), because I needed something now rather than later and took some shortcuts to put in all the features I personally needed, but it should be good enough for broad transcription of RP English. It should also be fairly trivial to hack in support for most character combinations. Feel free to check out the git repo!

-

How can a word be its own opposite?

streamable.com Watch "https://www.bbc.co.uk/iplayer/episode/b09j0h8z/qi-series-o-7-opposites?seriesId=b00ttsl6" on Streamable.

- phys.org Researcher investigates undocumented prehistoric languages through irregularities in current languages

Language can be a time machine—we can learn from ancient texts how our ancestors interacted with the world around them. But can language also teach us something about people whose language has been lost? Ph.D. candidate Anthony Jakob investigated whether the languages of prehistoric populations left...

- daily.jstor.org Black English Matters - JSTOR Daily

People who criticize African American Vernacular English don't see that it shares grammatical structures with more "prestigious" languages.


https://cdn.discordapp.com/attachments/939724110316056608/939724492333273109/unknown.png

Its strength lies in its innovation and playfulness with language.

https://cdn.discordapp.com/attachments/939724110316056608/939724968105738250/unknown.png

-

Universality and diversity in human song

From love songs to lullabies, songs spanning the globe—despite their diversity—exhibit universal patterns, according to the first comprehensive scientific analysis of the similarities and differences of music across societies around the world.

-

Linguists have identified a new English dialect that's emerging in South Florida

kbin.social Linguists have identified a new English dialect that's emerging in South Florida: "We got down from the car and went inside."

Crosspost from m/news

- www.scientificamerican.com This Ancient Language Has the Only Grammar Based Entirely on the Human Body

An endangered language family suggests that early humans used their bodies as a model for reality

-

The physics of languages

physicsworld.com The physics of languages. Marco Patriarca, Els Heinsalu and David Sánchez explain how physics has spread into the field of linguistics.

Saw this article and thought it was pretty interesting! I've seen some articles about Brownian motion and language evolution in the past, but this article brings in even more concepts.