![Smorty [she/her]](https://lemmy.blahaj.zone/pictrs/image/1523b604-f27a-49ef-802e-e073b33d65cf.webm?format=webp&thumbnail=96)

I'm a person who tends to program stuff in Godot and also likes to look at clouds. Sometimes they look really spicy outside.

Such a good song. Last time, I listened to it for like three months after kissmas, unironically.

It's a good one <3

Shuddup Shuddup Shuddup you're a girl, you're pretty and still complaining?...

Honest question to y'all windows users:

Do you have fun with your operating system?

GARN! I THINK...

GDShader: What's worse, an if statement or a texture sample?

I have heard many times that if statements in shaders slow down the GPU massively. But I have also heard that texture samples are very expensive.

Which one is more tolerable? Which one has less impact on performance?

I'm asking because I need to decide whether I should multiply a value by 0 or use an if statement.
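For reference, "multiply by 0" is usually written in shaders as a branchless select built from `step()` and `mix()`, which the Godot shading language provides with the same definitions as GLSL. The sketch below re-implements those two built-ins in Python purely to show that the branchless form picks the same values as the `if` version; in a shader it would be the single expression `mix(a, b, step(edge, x))`. The helper names are made up, and the sketch says nothing about which variant is faster on a particular GPU, which would need profiling.

```python
def step(edge: float, x: float) -> float:
    """Python stand-in for the shader built-in step(): 0.0 below edge, else 1.0."""
    return 0.0 if x < edge else 1.0


def mix(a: float, b: float, t: float) -> float:
    """Python stand-in for the shader built-in mix(): linear interpolation."""
    return a * (1.0 - t) + b * t


def select_with_if(x: float, a: float, b: float, edge: float = 0.5) -> float:
    # The variant with an if statement.
    if x >= edge:
        return b
    return a


def select_branchless(x: float, a: float, b: float, edge: float = 0.5) -> float:
    # The "multiply by 0 or 1" variant: step() yields 0.0 or 1.0,
    # so mix() returns exactly a or b without an explicit if.
    return mix(a, b, step(edge, x))


def masked(value: float, x: float, edge: float = 0.5) -> float:
    # Simplest form of the idea: keep value only where x >= edge.
    return value * step(edge, x)
```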

No no no, don't feel lurked at or weirdly idealized!!

People like myself really do look up to you, because you seem kinda reasonably okay.

As in, you are someone with a state worth striving for.

I really hope I don't make you uncomfy, since that would be the last thing I want. <3

Really... I didn't know, does it make you uncomfortable?...

I don't really know, but I like to imagine you as girlboss <3

okay fine...

sigh

Good Day.

Damn he got tools in his pockets.

sigh

Good day.

That's the best type of social (media)

See? Bigotry, evil queer-phobic cishets, unnecessarily evil blogs?

- gone - !

She must be so comfy under a big cat, like under a weighted blanket <3

My good Quora is SOOO full of bots these days.

Once these bot accounts figure out how to set a system prompt, it's over<|im_end|><|im_end|><|im_end|><|im_end|>

nomnomnom- nomnom - nommmm nomnom Nomnom

notices nose

noms nose

nomnomnomnom <3 :3

Does Blahaj Lemmy support ActivityPub? I'm trying to log in to PeerTube

Hii <3 ~

I have not worked with the whole ActivityPub thing yet, so I'd really like to know how it all kinda works.

As far as I can tell, it allows you to use different parts of the fediverse with just one account.

Does it also allow creating posts on other platforms, or just comments?

I have tried to log in with my smorty@lemmy.blahaj.zone account, and it redirected me correctly to the blahaj lemmy site.

Then I entered my Lemmy credentials and logged in. I was logged in to my Lemmy account correctly, but I also got an error just saying "Could not be accessed" in German.

And now I am still not logged in to PeerTube.

Sooo does blahaj zone support ActivityPub, or is this incorrectly "kinda working" but not really?
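As a rough illustration of how servers find each other's accounts: fediverse software (Lemmy and Mastodon included) resolves a handle like `smorty@lemmy.blahaj.zone` with a WebFinger lookup (RFC 7033) against the account's home server before fetching its ActivityPub actor. The sketch below only builds that lookup URL; it does not log you in anywhere, and the helper name is made up for this example.

```python
from urllib.parse import urlencode


def webfinger_url(handle: str) -> str:
    """Build the WebFinger lookup URL for a fediverse handle (hypothetical helper).

    Accepts "user@domain" or "@user@domain".
    """
    user, sep, domain = handle.lstrip("@").partition("@")
    if not sep or not user or not domain:
        raise ValueError(f"expected user@domain, got {handle!r}")
    # The resource is an acct: URI; urlencode percent-escapes ':' and '@'.
    query = urlencode({"resource": f"acct:{user}@{domain}"})
    return f"https://{domain}/.well-known/webfinger?{query}"
```

Fetching that URL returns a JSON document whose `links` entry with `"rel": "self"` points at the account's ActivityPub actor.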

Is Lemmy your "main social media app"? If not, which one is it?

I have fully transitioned to using Lemmy and Mastodon right when third party apps weren't allowed on Spez's place anymore, so I don't know how it is over there anymore.

What do you use? Are you still switching between the two, essentially dualbooting?

What other social media do you use? How do you feel about Fediverse social media platforms in general?

(I'm sorry if I'm the 100th person to ask this on here...)

We already have Linux at home!

I have to use Win11 for work and just noticed the lil Tux man in Microsoft's File Explorer, likely there to access WSL.

Apparently Microsoft now wants to keep people using Windows in a really interesting way: by simply integrating Linux into their own OS!

This way, people don't have to make the super hard and complicated switch to Linux, but they get to be lazy, use the preinstalled container and say "See, I use Linux too!".

While this is generally a good thing for people wanting to do things with the OS, it is also a clear sign that they want to make it feel "unnecessary" to switch to Linux, because you already have it!

WSL alone was already a smart move, but this goes one step further. This is a clever push on their side, raising the barrier to switching even more, since now there is less of a reason to. They are making it too comfortable to stay within Microsoft's walls.

On a different note: Should the general GNU/Linux community do the same? Should we integrate easier access to running Winblows apps on GNU/Linux? Currently I still find it too much of a hassle to correctly run Winblows applications, almost always relying on Lutris, Steam's Proton or Bottles to do the work for me.

I think it would be a game changer if double-clicking an EXE file resulted in immediate automatic Wine configuration for easy and direct use of the software, even if it takes a bit to set up.

I might just be some Fedora-using pleb, but I think having quick and easy access to Wine would make many people feel much more comfortable with the switch.

Having a system similar to how Winblows does it, with one container for all your .exe programs, would likely be a good start (instead of creating a new C drive and whatever for every program, which seems to be what Lutris and Bottles do).
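A minimal sketch of the "one container for all .exe programs" idea, assuming Wine is installed: Wine already reads the `WINEPREFIX` environment variable, so a double-click handler could launch every EXE with the same prefix. The prefix location and the helper names are hypothetical; Wine's own default prefix is `~/.wine`.

```python
import os
import shutil
import subprocess
from pathlib import Path

# Hypothetical location for one shared prefix (one "C: drive" for all programs).
SHARED_PREFIX = Path.home() / ".local" / "share" / "wine-shared"


def build_wine_command(exe_path, prefix=SHARED_PREFIX):
    """Return (argv, env) that run exe_path inside the shared prefix."""
    env = dict(os.environ)
    env["WINEPREFIX"] = str(prefix)  # Wine picks its prefix from this variable
    return ["wine", str(exe_path)], env


def run_exe(exe_path):
    if shutil.which("wine") is None:
        raise RuntimeError("wine was not found on PATH")
    argv, env = build_wine_command(exe_path)
    # If the prefix does not exist yet, Wine normally initializes it on first launch.
    return subprocess.run(argv, env=env)
```

Registered as the handler for `.exe` files (for example through a `.desktop` entry), it would be called as `run_exe("/path/to/setup.exe")`. The trade-off is the same as on Windows: programs sharing one prefix can also step on each other's DLLs and settings.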

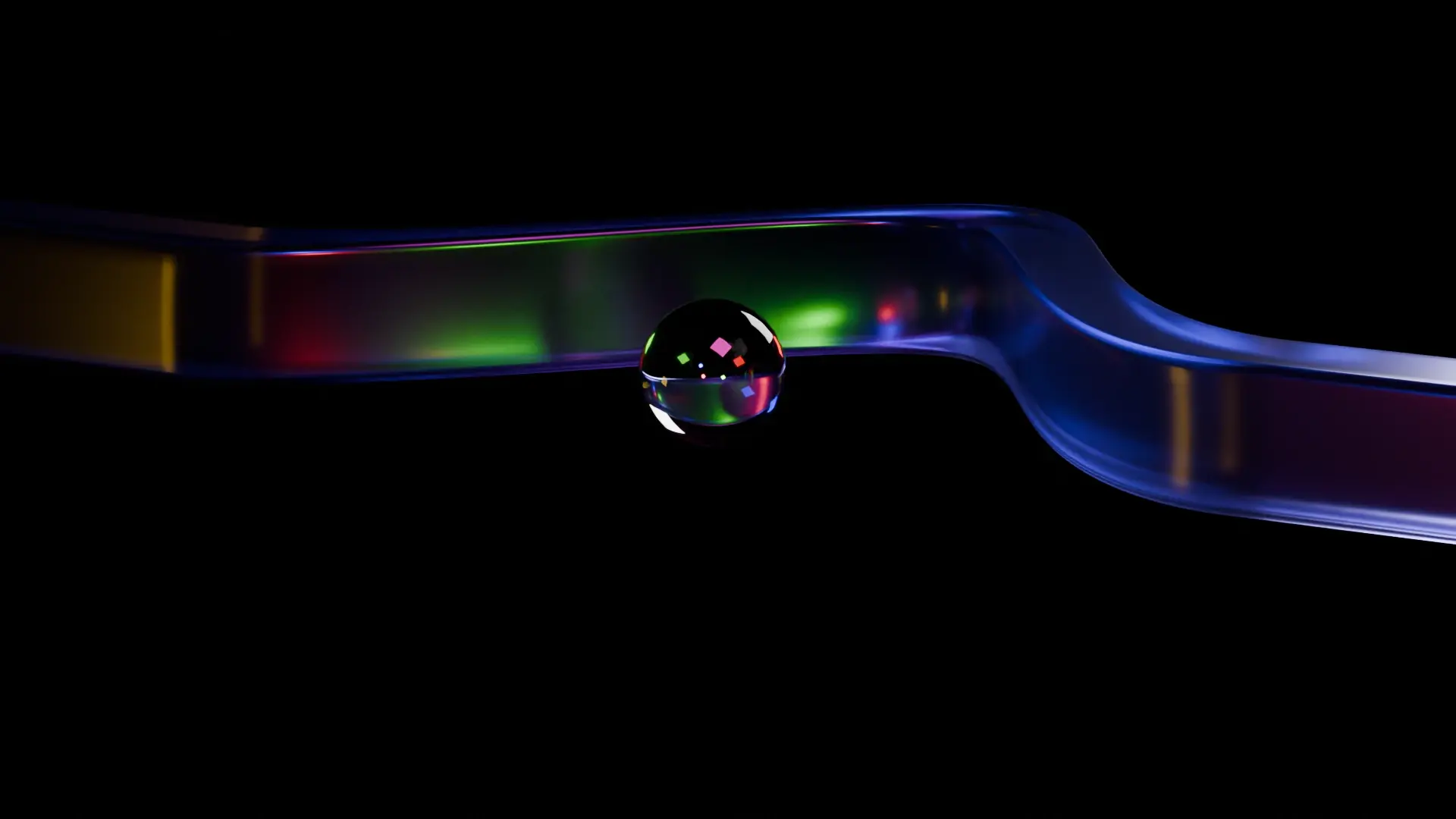

EDIT: Uploaded correct image

How should one treat fill-in-the-middle code completions?

Many code models, like the recent OpenCoder, can perform FIM (fill-in-the-middle) tasks, similar to Microsoft's GitHub Copilot.

You give the model a prefix and a suffix, and it will then try to generate what comes in between the two, hoping that what it comes up with is useful to the programmer.

I don't understand how we are supposed to treat these generations.

Qwen Coder (1.5B and 7B), for example, likes to first generate the completion and then rewrite what is in the suffix. Sometimes it adds three entirely new functions out of nothing, which have nothing to do with the script itself.

With both Qwen Coder and OpenCoder, I have found that if you put only whitespace as the suffix (essentially the part that comes after your cursor), the model generates a normal chat response with markdown and everything.

This is some weird behaviour. I might have to put some fake code as the suffix to get some actually useful code completions.
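One client-side workaround for the suffix rewriting (my own heuristic, not a feature of Qwen Coder or OpenCoder) is to cut the completion at the point where it starts repeating the code after the cursor. A minimal sketch, assuming the completion and the suffix are plain strings; the function name is made up:

```python
def trim_suffix_echo(completion: str, suffix: str) -> str:
    """Cut a FIM completion where it starts re-emitting the suffix.

    Heuristic: the first non-blank line of the suffix is used as a probe.
    If the probe appears in the completion, everything from there on is
    treated as a rewrite of code that already exists after the cursor.
    """
    probe = next((line.strip() for line in suffix.splitlines() if line.strip()), "")
    if not probe:
        # Whitespace-only suffix: nothing to compare against.
        return completion
    idx = completion.find(probe)
    if idx == -1:
        return completion
    # Drop the echoed code plus the indentation in front of it on that line.
    return completion[:idx].rstrip(" \t")
```

A very short probe line such as `}` or `pass` can match too early, so in practice a minimum probe length is probably worth adding.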

Watching cool transfems and cis-fems online or in shows makes me sad now so I'll stop

[Requesting Engagement from transfems]

(Blahaj lemmy told me to put this up top, so I did)

I did not expect this to happen. I followed FairyPrincessLucy for a long time, cuz she's real nice and seems cool.

Time passed and I noticed how I would feel very bad when watching her do stuff. I was like:

> damn, she so generally okay with her situation. Wish I was too lol

So I stopped watching her.

Just now I discovered another channel, Melody Nosurname, and I really, really like her videos! She seems very reasonable and her little character is super cute <3 But here too I noticed how watching the vids made me super uncomfortable. The representation is nice, for sure, and her videos are of very high quality, I can only recommend them (as in - the videos).

I started by noticing:

> woah, her tshirt is super cute, I wanna have that too!

Then I continue with:

> heyo her friend here seems also super cool. Damn wish I had cool friends

And then eventually the classic:

> damn, I wish I were her

At that point, it's already over. I end up watching another video and, despite my genuine interest in the topic, I stop it in the middle, close the tab and open Lemmy (and here we are).

Finally I end up watching videos by cis men, like Scott the Woz. They are fine, and I don't end up comparing myself to them (since I wouldn't necessarily want to be them). I also stopped watching feminine people online in general, as they tend to give me a very similar reaction. Just like:

> yeah, that's cool that you're mostly fine with yourself, I am genuinely happy for you that you got lucky during random character creation <3

I also watched The Owl House, which is a really good show (unfortunately owned by Disney) and I stopped watching when...

Spoiler for the Owl House

it started getting gay <3 cuz I started feeling way too jealous of them just being fine with themselves and pretty and gay <3 and such

I wanted to see where the show was going, and I'm sure it's real good, but that is not worth risking my wellbeing, I thought.

So anyway...

Have you had a period like that before? How did you deal with it? Do you watch transfem people? Please share your favs! <3 I also like watching SimplySnaps. Her videos are also really high quality, I just end up not being able to watch them for too long before the sad hits :(

additional info about me, if anyone cares

I currently don't take HRT, but I'm on my way. I'm attending psychological therapy with a really nice therapist here in Germany. I struggle to find good words to describe how I feel, but slowly I'm finding better words for it. I'm currently 19 and still present mostly masculine, while trying to act very nice, generally acceptable and friendly. So kinda in a way that makes both super sweet queer people <3 <3 <3 <3 and hetero cis queerphobes accept me as just another character. (I work at a school with very mixed ideologies, so I kinda have to.) But oh boi do I have social anxiety, even at home with mother...

EDIT: Changed info about SimplySnaps

EDIT2: Added The Owl House example

Want to make server less heavy, so there a rulefully small image

This one is just 37.05 kilobytes! That's a smol one!

Having trouble generating correct output? Try prefixes!

I'm just a hobbyist in this topic, but I would like to share my experience with using local LLMs for very specific generative tasks.

Predefined formats

With prefixes, we can essentially write the start of the LLM's response ourselves, without the model actually generating it. For example, when we want it to respond with bullet points, we can set the prefix to `- ` (a dash and a space). If we want JSON, we can use `` ```json\n{ `` as a prefix, to make it think that it has already started a JSON markdown code block.

If you want the JSON keys to be written in a specific order, you can set the prefix to something like this:

````plaintext
```json
{
  "first_key":
````

Translation

Let's say you want to translate a given text. Normally you would prompt a model like this:

````plaintext
Translate this text into German:
```plaintext
[The text here]
```
Respond with only the translation!
````

Or maybe you would instruct it to respond using JSON, which may work a bit better. But what if it gets the JSON key wrong? What if it adds a little ramble in front of or after the translation? That's where prefixes come in!

You can leave the prompt exactly as is, maybe instructing it to respond in JSON:

```plaintext
Respond in this JSON format: {"translation":"Your translation here"}
```

Now, you can pretend that the LLM already responded with part of the message, which I will call a prefix. The prefix for this specific use case could be this:

```plaintext
{ "translation":"
```

Now the model thinks that it already wrote these tokens, and it will continue the message from right where it thinks it left off.

The LLM might generate something like this:

```plaintext
Es ist ein wunderbarer Tag!" }
```

To get the complete message, simply combine the prefix and the generated text, resulting in this:

```plaintext
{ "translation":"Es ist ein wunderbarer Tag!" }
```

To minimize inference costs, you can add `"}` and `"\n}` as stop tokens, to stop the generation right after it finishes the JSON entry.
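A small sketch of the "combine prefix and generated text" step in Python, using the prefix and output from the translation example. One assumption worth flagging: many backends leave the matched stop sequence out of the returned text, so when generation stops on `"}` the closing quote and brace may be missing and have to be re-added before parsing. The function name is made up for this example.

```python
import json

# The prefix from the example: the model only writes what comes after it.
PREFIX = '{ "translation":"'


def assemble_translation(generated: str, prefix: str = PREFIX) -> str:
    """Glue prefix and continuation together and pull out the translation."""
    text = prefix + generated
    try:
        # Case 1: the closing '" }' came back as part of the generated text.
        return json.loads(text)["translation"]
    except json.JSONDecodeError:
        # Case 2: generation stopped on a stop sequence like '"}' and the
        # backend left it out of the returned text, so close string and object.
        return json.loads(text.rstrip() + '"}')["translation"]
```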

Code completion and generation

What if you have an LLM that wasn't trained with code completion tokens? We can get an effect similar to the trained tokens by using an instruction and a prefix!

The prompt might be something like this:

````plaintext
```python
[the code here]
```
Look at the given code and continue it in a sensible and reasonable way. For example, if I started writing an if statement, determine if an else statement makes sense, and add that.
````

And the prefix would then be the start of a code block followed by the given code, like this:

````plaintext
```python
[the code here]
````

This way, the LLM thinks it has already rewritten everything you wrote, and it will now try to continue from there. We can then add `` \n``` `` as a stop token to make it generate only code and nothing else.

This approach to code generation may be more desirable, as we can tune its completion using the prompt, like telling it to use certain code conventions.

Simply giving the model a prefix of `` ```python\n `` makes it start generating code immediately, without any preamble. Again, adding the stop sequence `` \n``` `` makes sure that no postamble is generated.
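The same trick expressed as plain Python data: a sketch assuming an ollama-style list of chat messages (dicts with `role` and `content`). The helper names are made up, and `cut_at_stop` is a hypothetical client-side safety net for backends that do not apply stop sequences themselves.

```python
# Three backticks, built at runtime so this example can sit inside a markdown code block.
FENCE = "`" * 3


def build_code_continuation_messages(code: str, language: str = "python") -> list[dict]:
    """Chat messages for the instruction + prefix trick."""
    instruction = (
        f"{FENCE}{language}\n{code}\n{FENCE}\n"
        "Look at the given code and continue it in a sensible and reasonable way."
    )
    # The assistant turn is the prefix: an opened code block plus the existing code.
    prefix = f"{FENCE}{language}\n{code}"
    return [
        {"role": "user", "content": instruction},
        {"role": "assistant", "content": prefix},
    ]


def cut_at_stop(generated: str, stop: str = "\n" + FENCE) -> str:
    """Keep only the text before the first occurrence of the stop sequence."""
    idx = generated.find(stop)
    return generated if idx == -1 else generated[:idx]
```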

Using this in ollama

Using this "technique" in ollama is very simple, but you must use the `/api/chat` endpoint and cannot use `/api/generate`. Simply append the start of a message to the `messages` list passed to the model, like this:

```json
"messages": [
  {"role": "user", "content": "Why is the sky blue?"},
  {"role": "assistant", "content": "The sky is blue because of"}
]
```

It's that simple! Now the model will complete the message with the prefix you gave it as "content".
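The same request from Python, as a sketch using only the standard library. It assumes a local ollama server on its default port 11434 and a model you have already pulled (`llama3.1` below is just an example name); `"stream": false` asks for one JSON reply instead of a stream, and stop sequences go into `options.stop`. I am also assuming the reply contains only the newly generated text, so the prefix is glued back on.

```python
import json
import urllib.request

# Assumption: a local ollama server on its default port.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_prefixed_chat_payload(model, user_prompt, prefix, stop=None):
    """Request body whose last message is an unfinished assistant turn (the prefix)."""
    payload = {
        "model": model,
        "messages": [
            {"role": "user", "content": user_prompt},
            {"role": "assistant", "content": prefix},
        ],
        "stream": False,  # one JSON reply instead of a stream of chunks
    }
    if stop:
        payload["options"] = {"stop": list(stop)}
    return payload


def chat_with_prefix(model, user_prompt, prefix, stop=None, url=OLLAMA_CHAT_URL):
    body = json.dumps(build_prefixed_chat_payload(model, user_prompt, prefix, stop)).encode("utf-8")
    request = urllib.request.Request(url, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    # Assumption: reply["message"]["content"] holds only the continuation,
    # so the prefix is prepended to get the complete message.
    return prefix + reply["message"]["content"]
```

Usage would look like `chat_with_prefix("llama3.1", "Why is the sky blue?", "The sky is blue because of")`.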

Be aware!

There is one pitfall I have noticed with this: you have to be aware of what the prefix gets tokenized into. Because we are manually setting the start of the message ourselves, it might not be tokenized optimally. That can confuse the LLM and make it generate one space too many or too few. This is mostly not an issue though, as

What do you think? Have you used prefixes in your generations before?

1 day ruling stew

This post was mischievously stolen from here

EDIT: Completely forgot to actually add the image. Here you go.

New to Blender, really liking the Cycles renderer and modifiers (using the "Warp" modifier here).

I'm trying to learn Blender for making tandril studio-like animations (the UI animations usually published by Microsoft when announcing something new, like here with Copilot).

I know that's a big goal, but I still wanna do it...

What is it with this externally-managed-environment pip install error?

```bash
marty@Marty-PC:~/git/exllama$ pip install numpy
error: externally-managed-environment

× This environment is externally managed
╰─> To install Python packages system-wide, try apt install python3-xyz, where xyz is the package you are trying to install.

    If you wish to install a non-Debian-packaged Python package, create a virtual environment using python3 -m venv path/to/venv. Then use path/to/venv/bin/python and path/to/venv/bin/pip. Make sure you have python3-full installed.

    If you wish to install a non-Debian packaged Python application, it may be easiest to use pipx install xyz, which will manage a virtual environment for you. Make sure you have pipx installed.

    See /usr/share/doc/python3.12/README.venv for more information.

note: If you believe this is a mistake, please contact your Python installation or OS distribution provider. You can override this, at the risk of breaking your Python installation or OS, by passing --break-system-packages.
hint: See PEP 668 for the detailed specification.
```

I get this error every time I try to install any kind of Python package. So far, I have always just used the `--break-system-packages` flag, but that seems, well, rather unsafe and breaking.

To this day, I see newly written guides, specifically for Linux, which don't point out this behaviour. They just say "[...] and then install this Python package with `pip install numpy`".

Is this something specific to my system, or is this a global thing?
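As far as I understand PEP 668, this is a distribution-level setting rather than something specific to one machine: distributions that adopt it (Debian 12 and newer, for example) ship a marker file named `EXTERNALLY-MANAGED` in the interpreter's standard-library directory, and pip refuses to install into that environment unless it runs inside a virtual environment. The sketch below roughly reproduces that check; the function names are made up, and it ignores overrides such as `--break-system-packages` or pip config files.

```python
import sys
import sysconfig
from pathlib import Path


def in_virtualenv() -> bool:
    # Inside a venv, sys.prefix points at the venv, while sys.base_prefix
    # still points at the interpreter the venv was created from.
    return sys.prefix != sys.base_prefix


def externally_managed_marker() -> Path:
    # PEP 668 places the marker file in the standard-library directory.
    return Path(sysconfig.get_path("stdlib")) / "EXTERNALLY-MANAGED"


def pip_would_refuse() -> bool:
    """Rough approximation of pip's PEP 668 check for the current interpreter."""
    return externally_managed_marker().exists() and not in_virtualenv()


if __name__ == "__main__":
    print("marker:", externally_managed_marker())
    print("in venv:", in_virtualenv())
    print("plain pip install blocked:", pip_would_refuse())
```

If it reports `True`, the route the error message itself suggests is a per-project environment: `python3 -m venv .venv`, then `.venv/bin/pip install numpy`.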

Changing the script editors text without modifying the undo-redo?

Godot Version: 4.4 dev 3. Question: Is it possible to get the undo-redo of the Godot script editor? I am currently inserting text into the script editor via code, and that triggers the undo-redo to record it. So when the user presses Ctrl-Z, it goes back to what I inserted with code. So essentially, ...

I posted this question on the Godot forum, but it has been overrun by bots like crazy (it's all random characters, so probably some AI).

I want to edit the text of the current script editor without modifying the internal undo-redo of the editor.

So essentially I want this to be possible:

- User writes some code

- Program modifies it, maybe improving the formatting and such...

- Program then reverts to previously written code

- User is able to undo their last code addition, without the programmatically inserted stuff showing up as the last undo step.

code-completion model (Qwen2.5-coder) rewrites already written code instead of just completing it

Video


I am using a code-completion model for my tool I am making for Godot (will be open sourced very soon).

Qwen2.5-coder 1.5b though tends to repeat what has already been written, or change it slightly. (See the video)

Is this intentional? I am passing the prefix and suffix correctly to ollama, so it knows where it currently is. I'm also trimming the amount of lines it can see, so the time-to-first-token isn't too long.

Do you have a recommendation for a better code model, better suited for this?
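For context, a minimal sketch of the line trimming and of a raw Qwen2.5-Coder FIM prompt. The `<|fim_prefix|>`, `<|fim_suffix|>` and `<|fim_middle|>` tokens are the ones Qwen2.5-Coder uses for fill-in-the-middle; the line limits and helper names are made up. If you build the prompt string yourself like this, ollama's `/api/generate` needs `"raw": true` so that it doesn't wrap the prompt in its own template.

```python
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"


def trimmed_context(text: str, cursor: int, lines_before: int = 40,
                    lines_after: int = 10) -> tuple[str, str]:
    """Split text at the cursor offset and keep only nearby lines on each side.

    The line the cursor sits on counts as one line on each side.
    """
    prefix_lines = text[:cursor].split("\n")
    suffix_lines = text[cursor:].split("\n")
    # max(0, ...) avoids the [-0:] slice pitfall, which would keep everything.
    prefix = "\n".join(prefix_lines[max(0, len(prefix_lines) - lines_before):])
    suffix = "\n".join(suffix_lines[:lines_after])
    return prefix, suffix


def qwen_fim_prompt(prefix: str, suffix: str) -> str:
    # Prefix-suffix-middle order: the model generates the middle after FIM_MIDDLE.
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"
```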

No wayland option despite fresh gnome install (Debian 12, testing, nvidia)

This is something I have been stuck on for a while.

I want to use Wayland for variable refresh rate and better handling of screen recordings.

I have tried time and time again to get a Wayland session running with the proprietary nvidia driver, but have not gotten there yet.

Only the X11 options are listed on the login screen. When using the fallback FOSS nvidia driver, however, all the correct X11 and Wayland options show up (including GNOME and KDE, both in X11 and Wayland).

Wasn't this fixed, like, about a year ago? I have the "latest" proprietary nvidia driver, but the current Debian one is still pretty old (535.183.06).

output from `nvidia-smi`

```bash
Sun Oct 27 03:21:06 2024
+---------------------------------------------------------------------------------------+
| NVIDIA-SMI 535.183.06             Driver Version: 535.183.06   CUDA Version: 12.2     |
|-----------------------------------------+----------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |         Memory-Usage | GPU-Util  Compute M. |
|                                         |                      |               MIG M. |
|=========================================+======================+======================|
|   0  NVIDIA GeForce GTX 1060 6GB    Off | 00000000:01:00.0  On |                  N/A |
| 25%   43C    P0              25W / 120W |    476MiB /  6144MiB |      0%      Default |
|                                         |                      |                  N/A |
+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+
| Processes:                                                                            |
|  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
|        ID   ID                                                             Usage      |
|=======================================================================================|
|    0   N/A  N/A      6923      G   /usr/lib/xorg/Xorg                          143MiB |
|    0   N/A  N/A      7045    C+G   ...libexec/gnome-remote-desktop-daemon       63MiB |
|    0   N/A  N/A      7096      G   /usr/bin/gnome-shell                         81MiB |
|    0   N/A  N/A      7798      G   firefox-esr                                 167MiB |
|    0   N/A  N/A      7850      G   /usr/lib/huiontablet/huiontablet             13MiB |
+---------------------------------------------------------------------------------------+
```

Querying the Godot documentation website? Is that possible?

I want to integrate the online documentation a bit nicer into the editor itself. Is it somehow possible to query that page and get the contents of the searched-for entries?

Best case would be getting the queried site content as JSON, but that's very unlikely, I think.

What should I use: big model-small quant or small model-no quant?

For about half a year I stuck with using 7B models and got a strong 4 bit quantisation on them, because I had very bad experiences with an old qwen 0.5B model.

But recently I tried running smaller models like llama3.2 3B with an 8-bit quant and qwen2.5-1.5B-coder at full 16-bit floating point, and those performed really well too on my 6GB VRAM GPU (GTX 1060).

So now I am wondering: Should I pull strong quants of big models, or low quants/raw 16bit fp versions of smaller models?

What are your experiences with strong quants? I saw a video by that technovangelist guy on youtube and he said that sometimes even 2bit quants can be perfectly fine.

UPDATE: Woah, I just tried llama3.1 8B Q4 on ollama again, and what a WORLD of difference compared to llama3.2 3B FP16!

The difference is super massive. The 3B and 1B llama3.2 models seem to be mostly good at summarizing text and maybe generating some JSON based on previous input. But the bigger 3.1 8B model can actually be used in a chat environment! It has a good response length (about 3 lines per message) and it doesn't stretch out its answer. It seems like a really good model and I will now use it for more complex tasks.
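A rough way to compare these options is to estimate how much memory the weights alone take: parameters × bits per weight / 8 bytes. This ignores the KV cache, context length and runtime overhead, and real Q4 formats average a bit more than 4 bits per weight, so treat the numbers as lower bounds. The parameter counts below are the nominal sizes from the model names.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 10**9 bytes)."""
    return params_billion * bits_per_weight / 8


if __name__ == "__main__":
    vram_gb = 6.0  # GTX 1060 6GB
    for name, params, bits in [
        ("llama3.1 8B @ 4 bit", 8.0, 4),
        ("llama3.2 3B @ 8 bit", 3.0, 8),
        ("llama3.2 3B @ 16 bit", 3.0, 16),
        ("qwen2.5-coder 1.5B @ 16 bit", 1.5, 16),
    ]:
        size = weight_memory_gb(params, bits)
        print(f"{name:30} ~{size:.1f} GB of {vram_gb} GB VRAM")
```

By this estimate, the 8B model at 4 bit (~4 GB) needs less memory for its weights than the 3B model at FP16 (~6 GB), which fits the difference described in the update.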

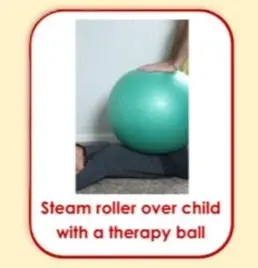

Drive over child with therapy rule

Some image I found when searching for deep pressure on DuckDuckGo. Never heard of the term before, buuuuuut seems interesting...

Also, from the same image, there is a hotdog

I used to do this as a child, alone..

And now I ask YOU, have you heard of this deep pressure term before? What are your experiences? (Now u be the entertainer! I'm done with pretending!)