-

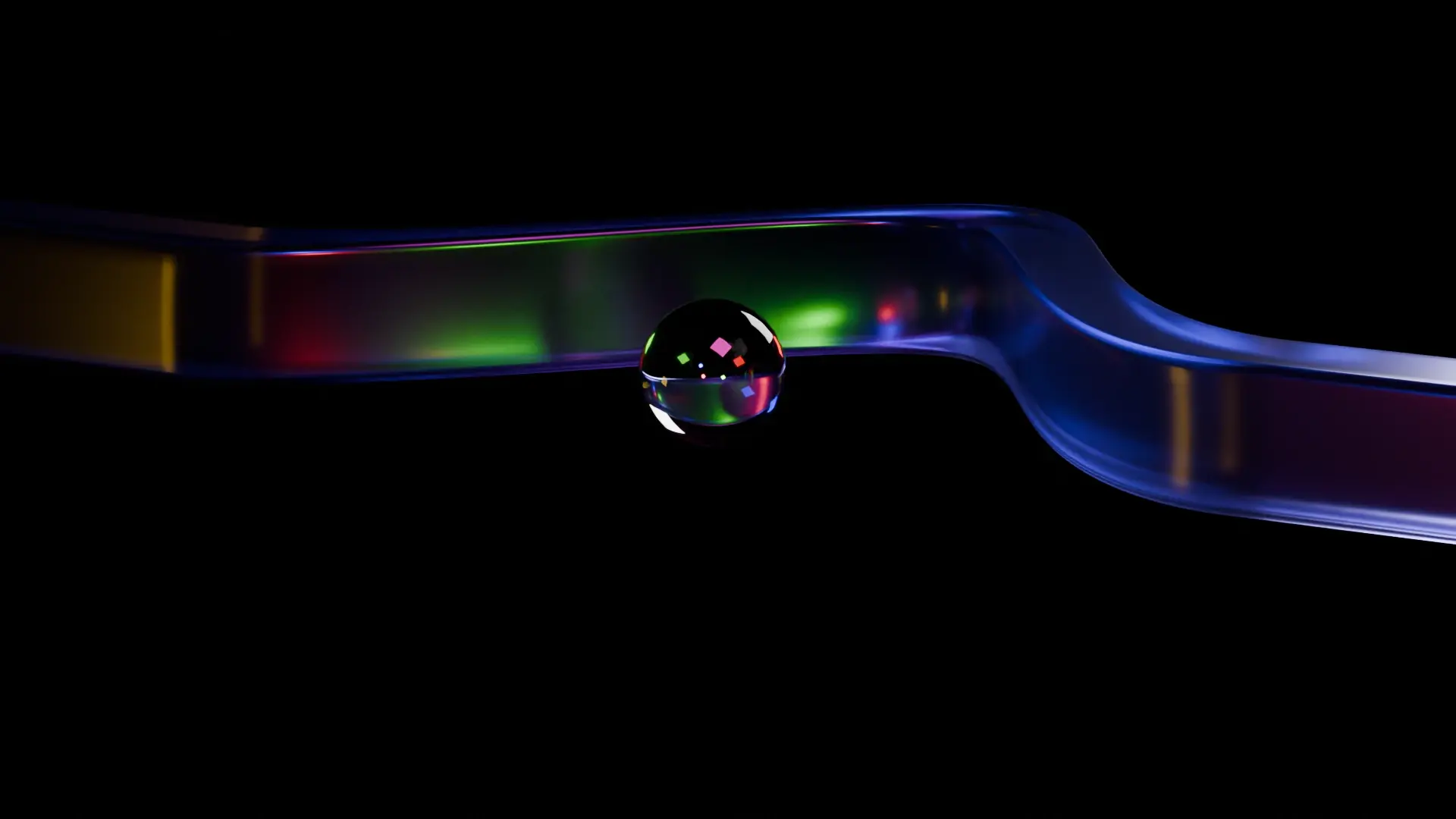

New to Blender, really liking the Cycles renderer and modifiers. (Using the "Warp" modifier here.)

I'm trying to learn Blender to make Tendril Studio-like animations (the UI animations Microsoft usually publishes when announcing something new, like here with Copilot).

I know that's a big goal, but I still wanna do it...

-

How to modify nearest vertices in geometry nodes?

I have an object and points (point cloud). I would like to modify the named attribute of the nearest vertex to each point. How to do this?

I tried using the "sample nearest" node, but I couldn't make a selection out of the output. Then I tried it with the "sample index" node, and it seemed like it was modifying the named attribute for all vertices for each point, or the "sample nearest" node used the source object's location instead of the point cloud.

I would appreciate some help
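For what it's worth, the underlying logic (for each point, look up the index of the closest vertex, then write the attribute only at those indices) can be sketched in plain Python. This is not a node setup, just an illustration of the direction of the lookup; the function names and sample data are made up, and it uses a brute-force search rather than anything Blender-specific.

```python
from math import dist


def nearest_vertex_indices(vertices, points):
    """For each point, return the index of the closest vertex (brute force)."""
    return [
        min(range(len(vertices)), key=lambda i: dist(vertices[i], p))
        for p in points
    ]


def mark_nearest(vertices, points, marked_value=1.0, default=0.0):
    """Build a per-vertex attribute that is set only on vertices nearest to some point."""
    attribute = [default] * len(vertices)
    for index in nearest_vertex_indices(vertices, points):
        attribute[index] = marked_value
    return attribute


# Hypothetical data: a unit quad and a two-point cloud hovering near two of its corners.
vertices = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0)]
points = [(0.9, 0.1, 0.2), (0.1, 0.8, -0.3)]
print(mark_nearest(vertices, points))
```

The search runs over the mesh vertices and is evaluated once per point, so the result is a short list of vertex indices (one per point) rather than a value for every vertex. Getting that direction reversed would produce the "every vertex gets modified" symptom described above.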

-

HUGE Blender Add-On Giveaway + An Amazing New 'Must Have' Free Blender Plugin QRemeshify

-

AKIRA in 3D (4k) - YouTube

cross-posted from: https://sopuli.xyz/post/18243654

> Up until I saw the person I couldn't even tell this was 3d which is saying something because the vast majority of 3d trying to emulate 2d I have seen has a weird plastic look to them.

-

The Scrombler: breaking up large tiled textures

This is a simple shader node group that breaks up the visual repetition of tiled textures. It uses a Voronoi texture's cell colors to apply a random translation and/or rotation to an image texture's vector input to produce an irregular pattern.

I primarily made it for landscape materials. The cells' borders are still sharp, so certain materials, like bricks, wood, or fabric, will not look good.
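As a rough illustration of the idea (not the actual node group), here is a Python sketch that gives every cell its own repeatable random rotation and translation before the texture lookup. It assumes square grid cells instead of Voronoi cells to keep the code short, and all names and parameters are hypothetical.

```python
import math
import random


def cell_random(cell, seed=0):
    """Return three repeatable pseudo-random values in [0, 1) for an integer cell id."""
    rng = random.Random(hash((cell, seed)))
    return rng.random(), rng.random(), rng.random()


def scramble_uv(u, v, cell_size=1.0, max_rotation=math.tau, seed=0):
    """Rotate and translate (u, v) by an amount that is constant within each cell."""
    # Assumption: square grid cells stand in for Voronoi cells here.
    cell = (math.floor(u / cell_size), math.floor(v / cell_size))
    r_u, r_v, r_angle = cell_random(cell, seed)
    angle = r_angle * max_rotation
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    # Rotate about the origin, then shift by the per-cell offset.
    return (u * cos_a - v * sin_a + r_u, u * sin_a + v * cos_a + r_v)


print(scramble_uv(0.2, 0.3))
print(scramble_uv(1.2, 0.3))  # neighbouring cell, different transform
```

Because the transform is constant inside a cell and jumps at the boundary, the seams between cells stay sharp, which matches the limitation mentioned above.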

-

Blender Survey 2024

Blender Foundation announces its first user survey. Fill it in and help shape the future of Blender! (www.blender.org)

-

How do I replicate this shader and animation style

I recently came across a Japanese animation and love the way it looks. I want to replicate its shader style and animation style.

-

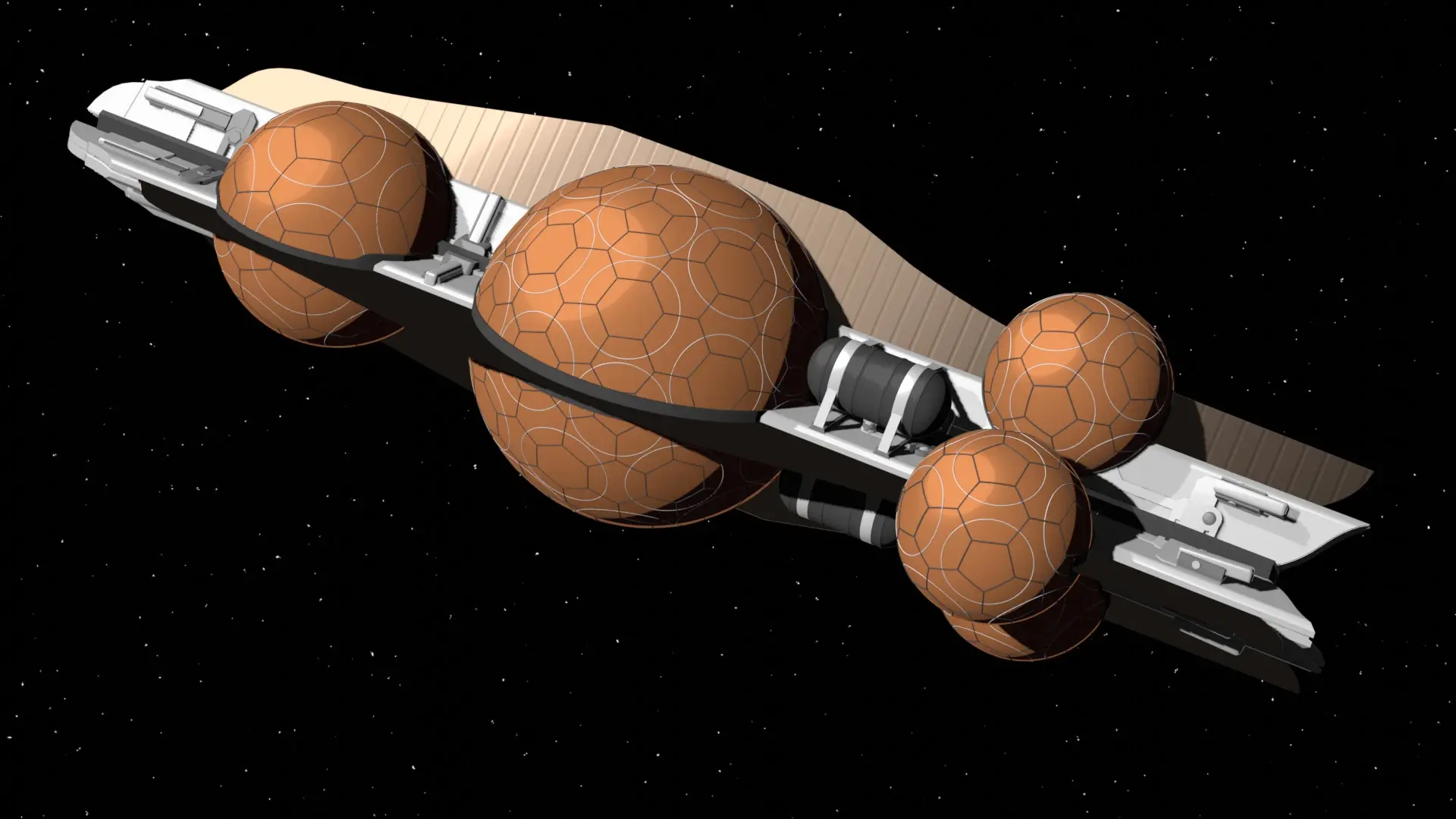

Simple parametric python model

Recently I've been planning on designing some optics, nothing fancy, just for a projector system that I'm messing around with. Anyway, I got this idea that I could basically model the optics in Blender, using LuxCore to simulate the light path as it bounces off the mirrors and passes through the lenses.

So I am able to create lenses and parts of any kind I can think of, but I would love to be able to control the parts after I've created them via parameters, for example the radius of curvature of a mirror.

Is that possible using a Python script? Like keeping the script that created the geometry linked to the geometry in such a way that I can come back to the script and change it later, or maybe even change it using the timeline and keyframes?
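One way to picture the "parametric" part is a function that rebuilds the vertex positions from the parameters every time it is called. The sketch below is plain Python with hypothetical names and defaults; it only computes the points of a spherical mirror surface from a radius of curvature and an aperture radius. Handing those points to Blender (for example by rebuilding a mesh whenever a parameter changes) is left out and would need to be checked against the Blender Python API.

```python
import math


def spherical_sag(r, radius_of_curvature):
    """Depth of a spherical surface at distance r from the optical axis."""
    R = radius_of_curvature
    if abs(r) > abs(R):
        raise ValueError("aperture is larger than the sphere itself")
    # copysign keeps the sign of R: positive R bulges one way, negative R the other.
    return R - math.copysign(math.sqrt(R * R - r * r), R)


def mirror_vertices(radius_of_curvature, aperture_radius, rings=8, segments=32):
    """Vertex positions of a spherical cap: one centre vertex plus concentric rings."""
    verts = [(0.0, 0.0, 0.0)]
    for ring in range(1, rings + 1):
        r = aperture_radius * ring / rings
        z = spherical_sag(r, radius_of_curvature)
        for seg in range(segments):
            theta = math.tau * seg / segments
            verts.append((r * math.cos(theta), r * math.sin(theta), z))
    return verts


# Changing the parameter and calling the function again gives the updated shape.
shallow = mirror_vertices(radius_of_curvature=200.0, aperture_radius=25.0)
steep = mirror_vertices(radius_of_curvature=50.0, aperture_radius=25.0)
print(shallow[-1][2], steep[-1][2])  # edge depth for each curvature
```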

-

HELP: combining "decals" with alpha in compositor

Hi

So, the thing I want to accomplish is to add .png images, compile them, and then transform the compiled monstrosity (move, scale, etc.).

But the thing is, if I “alphaover” the images with some offset, for example:

the image laid over the other gets cut off, as the overlay can’t reach outside the dimensions of the underlying one.

I know I can just:

- use e.g. GIMP and combine the images there, but I’d rather have my workflow entirely in Blender.

- add a ~billion pixels of transparent padding around the decal as a workaround, but that sounds silly, like brute-forcing the concept.

How would I go about getting all overlaid images to display in full in such a case? I’ve tried different options on the “alpha over” and “color mix” nodes without results, but it's entirely possible that I just missed some critical combination.

So, thoughts?
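On the padding workaround: the canvas only needs to be as large as the union of all the offset images, not arbitrarily huge. The Python sketch below is just that arithmetic, with a made-up function name and made-up sizes. It is not a compositor node setup and says nothing about which nodes would apply it.

```python
def union_canvas(layers):
    """Smallest canvas that contains every layer.

    layers: list of (width, height, offset_x, offset_y), where the offset is the
    position of the layer's lower-left corner in pixels.
    Returns (canvas_width, canvas_height, placements), with placements giving each
    layer's lower-left corner inside the new canvas.
    """
    min_x = min(x for _, _, x, _ in layers)
    min_y = min(y for _, _, _, y in layers)
    max_x = max(x + w for w, _, x, _ in layers)
    max_y = max(y + h for _, h, _, y in layers)
    placements = [(x - min_x, y - min_y) for _, _, x, y in layers]
    return max_x - min_x, max_y - min_y, placements


# Hypothetical example: a 512x512 base with a 256x256 decal hanging off its lower-right edge.
layers = [(512, 512, 0, 0), (256, 256, 400, -100)]
print(union_canvas(layers))
```

With these numbers the base would need to be placed on a 656x612 transparent canvas before the decal is laid over it, which is a much smaller pad than "a billion pixels".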

-

"A Past Outside Time"

This is a remake of one of my first nature scenes; it's always so satisfying to look back and see how far I've come since then. All made in Blender, rendered with Cycles.

Let me know what you think!

-

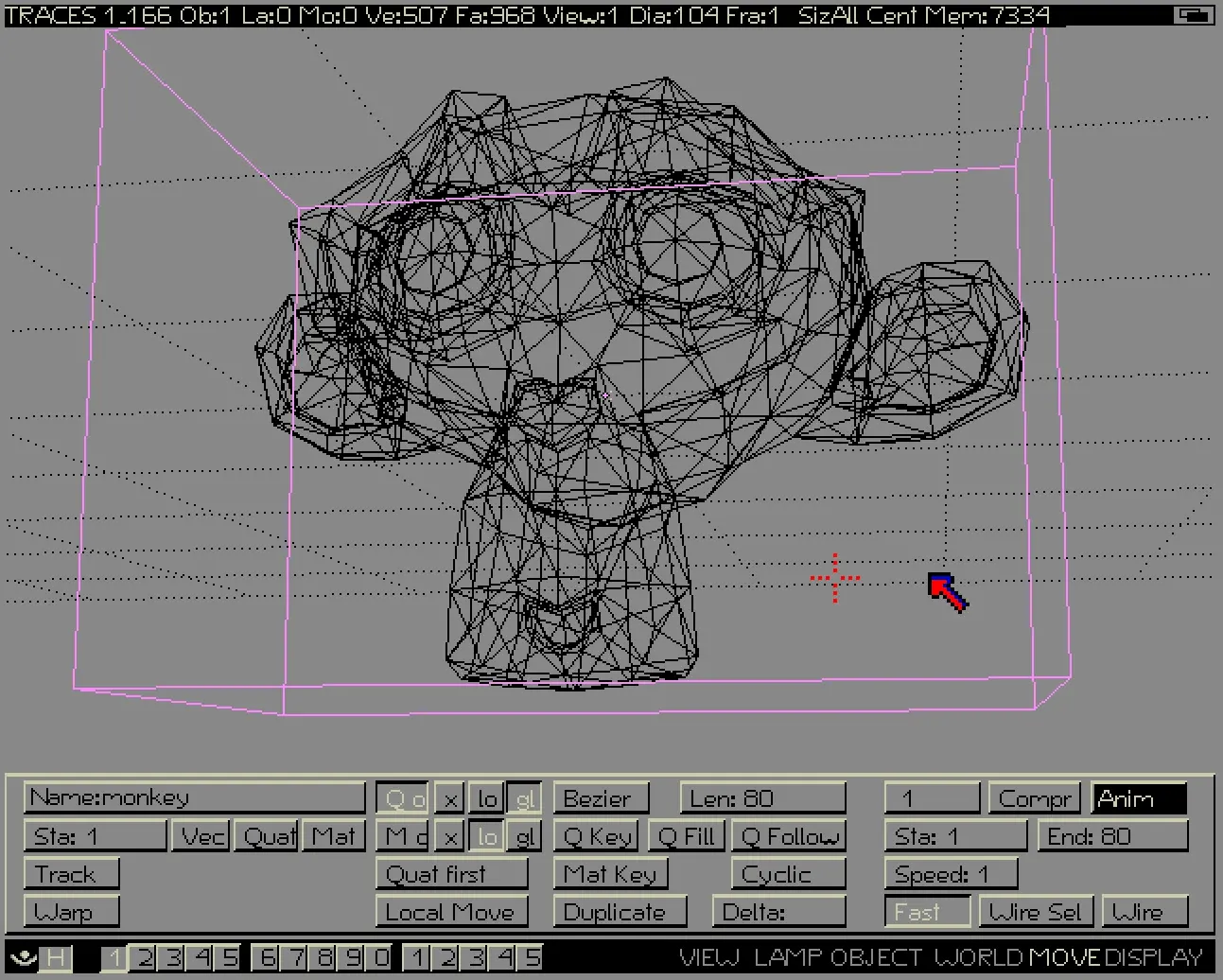

Blender's predecessor (Traces) running on Amiga

cross-posted from: https://sh.itjust.works/post/24674915

> Sourced from this article about Blender's history, which interviews Ton Roosendaal, the creator.

-

NijiGPen Updates v0.8~0.10: Grease Pencil 2D Addon

I personally find this addon quite innovative. To me it looks like a 2D drawing toolset inside a 3D program that is more powerful than, and has unique 2D drawing features absent from, actual state-of-the-art 2D drawing programs like CSP and Krita!

-

"Backrooms"-ish spoopyhouse

(also; an album: https://imgur.com/a/H4EZCdV - changed the topic link to show the render, for obvious visibility. Album includes node-setups for geonodes and the gist of what I use for most materials)

Hi all, I'm an on/off Blender hobbyist. I started this "project" because a friend of mine baited me into it a bit, so I went with it. The idea is to make a "music video" of sorts. Gloomy music, camera fly/walkthrough of a spoopy house, all that cheesy stuff.

It started basically with the geonodes (as shown in the imgur album) - basically it's a simple thing that generates walls/floorlists around a floor-mesh and applies given materials to them, nothing fancy but it allows me to quickly prototype the building layout.

The scene uses some assets from blendswap:

- https://blendswap.com/blend/14139 - furniture, redid the materials as they were way too bright for the direction I intended to go. But the modeling on the furniture is top notch, if a bit lowpoly but nothing a subsurf mod. can't fix.

- https://blendswap.com/blend/25115 - the dinner on the table. Also tweaked the material quite a bit, the initial one was way too shiny and lacked SSS.

Thanks to the authors of these blendswaps <3

edit: the images on paintings on the wall are some spooky paintings I found on google image search, but damn it I can't recall the search term to give props. I'm a failure.

The source for the wall/floor textures is lost to time; I've had these in my stash for like a decade. Wish I could make these on my own :/

-

Record expression values as keyframes

Good morning,

Hope this is not a stupid question, I am very new to Blender. So, my setup is:

- a 3D environment built from iPad photogrammetry

- we insert some lasers (a simple cylinder with an emission node)

- we control the lasers using QLC+ --> Art-Net --> BlenderDMX and a Python expression that modulates the emission color for every laser from a separate DMX channel.

We would now love to be able to store the DMX animation directly in Blender as keyframes, in order to export the animation and put it back on the iPad for AR simulation. Is there any way to record the driver data in real time?
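The general shape of such a recording is "sample the live value once per frame, then write each sample as a keyframe". Below is a minimal Python sketch of that pattern, untested against BlenderDMX. `ValueRecorder`, `bake_samples`, `FakeSocket`, and the DMX feed are all hypothetical. The only Blender-shaped assumption is that the target has a `keyframe_insert(data_path=..., frame=...)` method, as Blender's property owners do. How to read the live driven value inside Blender, and where to call `capture` from (a frame-change handler seems a likely place), are left open.

```python
class ValueRecorder:
    """Collects (frame, value) samples from any callable that returns the live value."""

    def __init__(self, read_value):
        self.read_value = read_value
        self.samples = []

    def capture(self, frame):
        self.samples.append((frame, self.read_value()))


def bake_samples(target, data_path, samples):
    """Replay recorded samples as keyframes on `target`.

    `target` only needs attribute assignment and a
    keyframe_insert(data_path=..., frame=...) method.
    """
    for frame, value in samples:
        setattr(target, data_path, value)
        target.keyframe_insert(data_path=data_path, frame=frame)


class FakeSocket:
    """Stand-in for a Blender property owner so the sketch runs outside Blender."""

    def __init__(self):
        self.default_value = None
        self.keys = []

    def keyframe_insert(self, data_path, frame):
        self.keys.append((frame, getattr(self, data_path)))


# Hypothetical DMX feed: one RGBA colour per frame.
dmx_feed = iter([(1.0, 0.0, 0.0, 1.0), (0.0, 1.0, 0.0, 1.0), (0.0, 0.0, 1.0, 1.0)])
recorder = ValueRecorder(lambda: next(dmx_feed))
for frame in range(1, 4):
    recorder.capture(frame)

socket = FakeSocket()
bake_samples(socket, "default_value", recorder.samples)
print(socket.keys)
```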

-

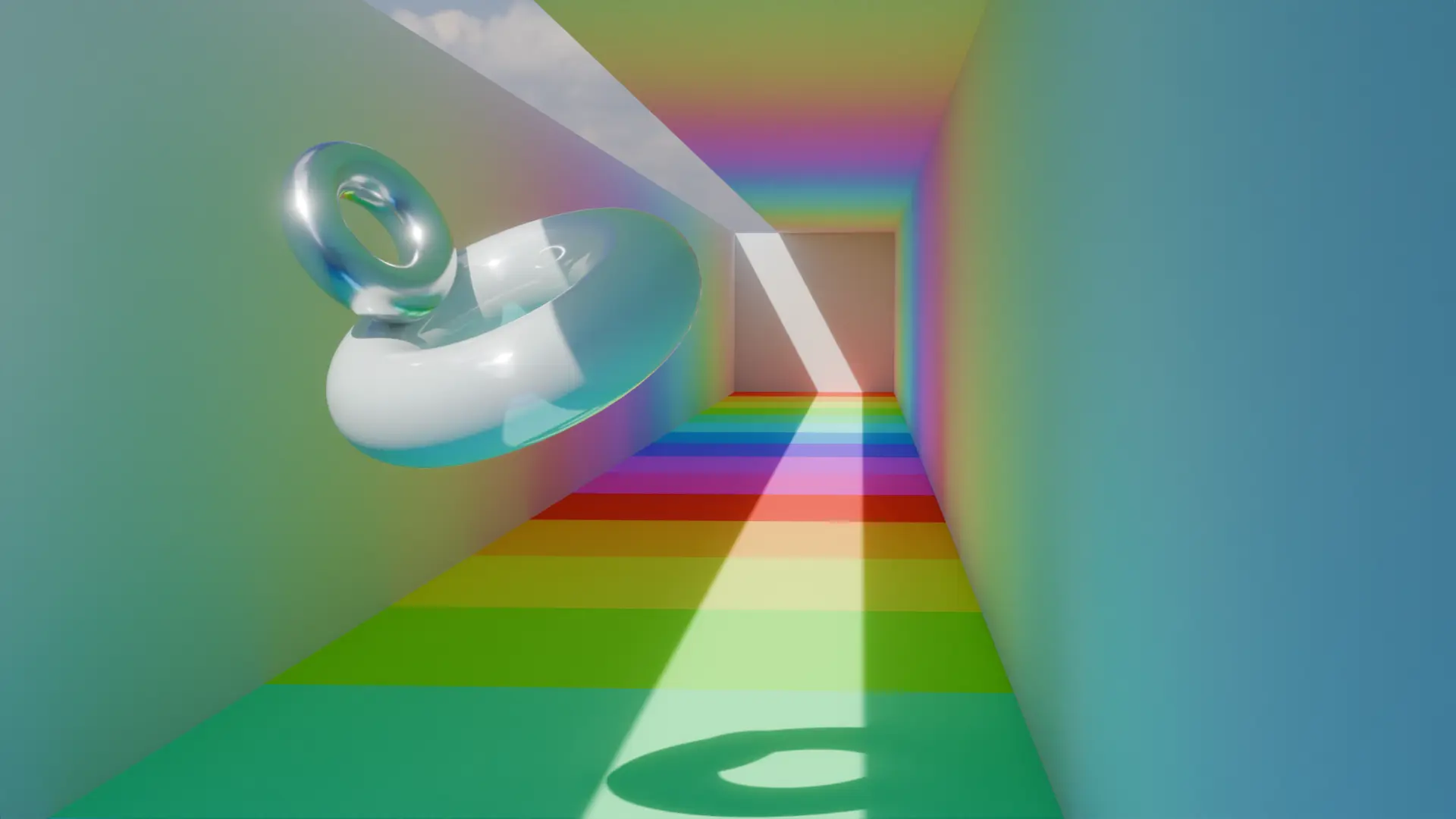

the 'look, we have global illumination!' box

I've often seen this sort of thing in videos advertising GI in Minecraft shaders, and tried it out in Blender.